|

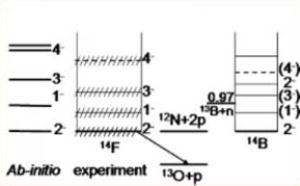

This graph shows the flourine-14 supercomputer predictions (far-left) and experimental results (center). The striking similarities between these graphs indicate that researchers are gaining a better understanding of the precise laws that govern the strong force.

[Credit: James Vary] |

This is the K500 superconducting cyclotron at Texas A&M University that achieved the first sightings of fluorine-14.

[Credit: Robert Tribble/Texas A&M Cyclotron Institute]

"This is a true testament to the predictive power of the underlying theory," says Vary. "When we published our theory a year ago, fluorine-14 had never been observed experimentally. In fact, our theory helped the team secure time on their newly commissioned cyclotron to conduct their experiment. Once their work was done, they saw virtually perfect agreement with our theory."

He notes that the ability to reliably predict the properties of exotic nuclei with supercomputers helps pave the way for researchers to cost-effectively improve nuclear reactor designs, to predict results from next-generation accelerator experiments that will produce rare and exotic isotopes, and to better understand phenomena such as supernovae and neutron stars.

"We will never be able to travel to a neutron star and study it up close, so the only way to gain insights into its behavior is to understand how exotic nuclei like fluorine-14 behave and scale up," says Vary.

Developing a Computer Code to Simulate the Strong Force

Including fluorine-14, researchers have so far discovered about 3,000 nuclei in laboratory experiments and suspect that 6,000 more could still be created and studied. Understanding the properties of these nuclei will give researchers insights into the strong force, which could in turn be applied to develop and improve future energy sources.

With these goals in mind, the Department of Energy's Scientific Discovery through Advanced Computing (SciDAC) program brought together teams of theoretical physicists, applied mathematicians, computer scientists and students from universities and national laboratories to create a computational project called the Universal Nuclear Energy Density Functional (UNEDF). The project uses supercomputers to predict and understand the behavior of a wide range of nuclei, including their reactions, and to quantify uncertainties. In fact, fluorine-14 was simulated with a code called Many Fermion Dynamics–nuclear (MFDn) that is part of the UNEDF project.

According to Vary, much of this code was developed on NERSC systems over the past two decades. "We started by calculating how two or three neutrons and protons interact, then built up our interactions from there to predict the properties of exotic nuclei like fluorine-14 with nine protons and five neutrons," says Vary. "We actually had these capabilities for some time, but were waiting for computing power to catch up. It wasn't until the past three or four years that computing power became available to make the runs."

Through the SciDAC program, Vary's team partnered with Ng and other scientists in Berkeley Lab's CRD, who brought discrete and numerical mathematics expertise to improve a number of aspects of the code. "The prediction of fluorine-14 would not have been possible without SciDAC. Before our collaboration, the code had some bottlenecks, so performance was an issue," says Esmond Ng, who heads Berkeley Lab's Scientific Computing Group. Vary and Ng lead teams that are part of the UNEDF collaboration.

"We would not have been able to solve this problem without help from Esmond and the Berkeley Lab collaborators, or the initial investment from NERSC, which gave us the computational resources to develop and improve our code," says Vary. "It just would have taken too long. These contributions improved performance by a factor of three and helped us get more precise numbers."

He notes that without the improvements implemented with the Berkeley Lab team's help, a single simulation of fluorine-14 would have taken 18 hours on 30,000 processor cores. Thanks to the SciDAC collaboration, each final run required only 6 hours on 30,000 processor cores. The final runs were performed on the Jaguar system at the Oak Ridge Leadership Computing Facility with an Innovative and Novel Computational Impact on Theory and Experiment (INCITE) allocation from the Department of Energy's Office of Advanced Scientific Computing Research (ASCR).
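As a rough check on those figures, the short Python sketch below works out the speedup and the compute saved per run from the numbers quoted above (18 hours versus 6 hours, both on 30,000 cores). The constant and function names are illustrative only, and "core-hours" is assumed here to mean simply cores multiplied by wall-clock hours; the article does not say how the allocation was actually accounted.

```python
# Figures quoted in the article for a single fluorine-14 simulation.
CORES = 30_000
HOURS_BEFORE = 18  # estimated run time without the code improvements
HOURS_AFTER = 6    # run time of each final run


def core_hours(cores: int, hours: int) -> int:
    """Compute consumed by one run, assumed to be cores times wall-clock hours."""
    return cores * hours


speedup = HOURS_BEFORE / HOURS_AFTER
before = core_hours(CORES, HOURS_BEFORE)
after = core_hours(CORES, HOURS_AFTER)
saved = before - after

print(f"speedup: {speedup:.1f}x")          # speedup: 3.0x
print(f"core-hours before: {before:,}")    # core-hours before: 540,000
print(f"core-hours after:  {after:,}")     # core-hours after:  180,000
print(f"core-hours saved:  {saved:,}")     # core-hours saved:  360,000
```

The ratio of 3 matches the "factor of three" performance gain Vary cites, and under this assumption each final run used about 360,000 fewer core-hours than it would have without the improvements.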